2022-04-25 Teachers' Conference, LiepU

https://playground.tensorflow.org

Derivative rules

Constant rule

Power rule

Exponent rule

Chain rule

Product rule

Quotient rule

Reciprocal rule
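
For reference, the rules listed above can be written in their standard textbook form. The symbols $f$, $g$, $c$ and $n$ are notation chosen here, not taken from the original slides, and "exponent rule" is assumed to mean the derivative of the exponential function.

```latex
\begin{align*}
\text{Constant rule:}\quad   & \frac{d}{dx}\,c = 0 \\
\text{Power rule:}\quad      & \frac{d}{dx}\,x^{n} = n\,x^{n-1} \\
\text{Exponent rule:}\quad   & \frac{d}{dx}\,e^{x} = e^{x} \\
\text{Chain rule:}\quad      & \frac{d}{dx}\,f\bigl(g(x)\bigr) = f'\bigl(g(x)\bigr)\,g'(x) \\
\text{Product rule:}\quad    & \frac{d}{dx}\,\bigl(f(x)\,g(x)\bigr) = f'(x)\,g(x) + f(x)\,g'(x) \\
\text{Quotient rule:}\quad   & \frac{d}{dx}\,\frac{f(x)}{g(x)} = \frac{f'(x)\,g(x) - f(x)\,g'(x)}{g(x)^{2}} \\
\text{Reciprocal rule:}\quad & \frac{d}{dx}\,\frac{1}{f(x)} = -\frac{f'(x)}{f(x)^{2}}
\end{align*}
```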

SGD
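
A minimal sketch of the stochastic gradient descent update, assuming a one-parameter model `w * x` fitted with squared error. The data, the helper name `sgd_step` and the learning rate are illustrative choices, not part of the original notes.

```python
import numpy as np

def sgd_step(param, grad, alpha):
    """One update: move the parameter against the gradient."""
    return param - alpha * grad

rng = np.random.default_rng(0)
xs = np.array([0.0, 1.0, 2.0, 3.0])
ys = 2.0 * xs  # toy targets generated with slope 2

w = 0.0
for step in range(200):
    i = rng.integers(len(xs))               # random sample: the "stochastic" part
    grad = 2 * (w * xs[i] - ys[i]) * xs[i]  # d/dw of (w*x - y)^2
    w = sgd_step(w, grad, alpha=0.05)

print(w)  # approaches the slope used to generate ys
```

Each step uses the gradient of a single sample rather than the whole dataset, which is what distinguishes SGD from full-batch gradient descent.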

MAE derivative
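
The derivative of the absolute error |y' - y| with respect to y' is the sign of (y' - y). A small sketch, using the same smoothed form as the training code in these notes (division by the absolute value plus a small constant); the function names `mae` and `d_mae` and the sample values are assumptions for illustration.

```python
import numpy as np

def mae(y_prim, y):
    """Mean absolute error."""
    return np.mean(np.abs(y_prim - y))

def d_mae(y_prim, y, eps=1e-8):
    """Per-sample derivative of |y_prim - y| w.r.t. y_prim.

    Equivalent to sign(y_prim - y); eps avoids division by zero
    when the prediction is exact.
    """
    diff = y_prim - y
    return diff / (np.abs(diff) + eps)

y = np.array([[1.0], [2.0], [3.0]])
y_prim = np.array([[1.5], [1.0], [3.0]])

loss = mae(y_prim, y)
grad = d_mae(y_prim, y)
print(loss)
print(grad)
```

The 1/N factor of the mean is left out of `d_mae` here; in the training loop it reappears when the per-sample gradients are averaged before the update.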

Linear function

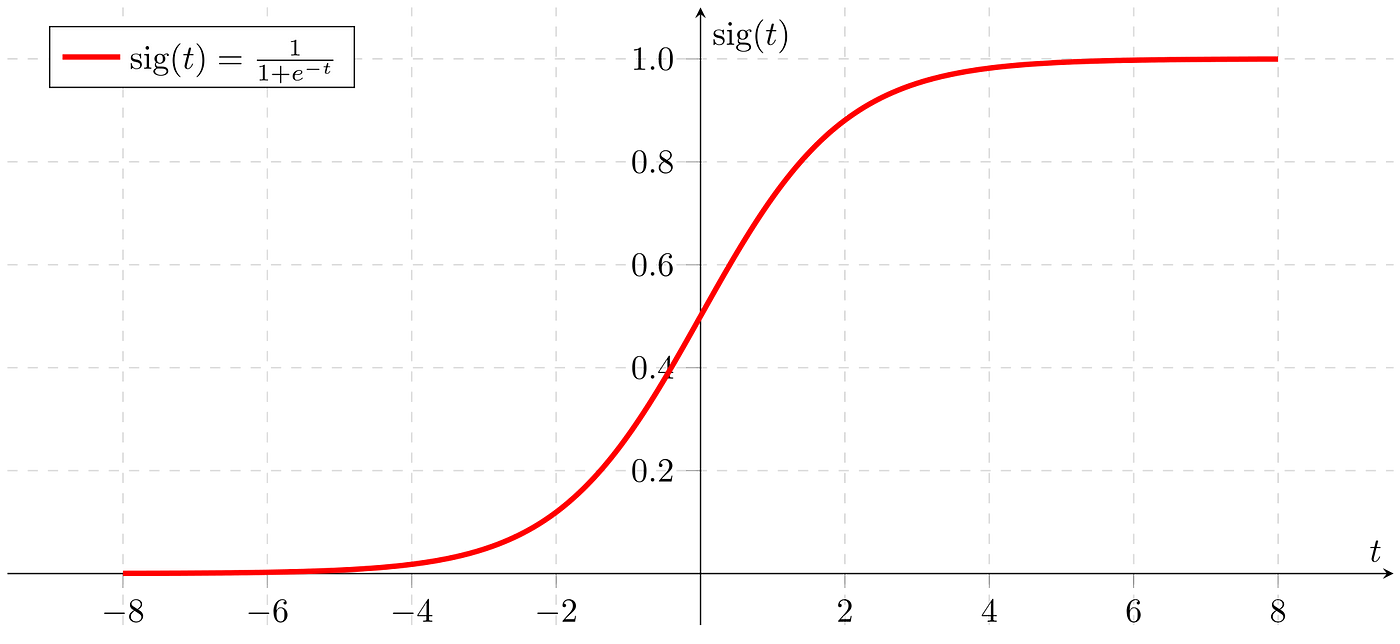
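
For the linear function, the partial derivatives needed in backpropagation can be written per component. The index notation below is chosen here to match the `W.T @ x + b` form used in the code of these notes; it is not taken from the original slide.

```latex
\begin{align*}
y_j &= \sum_i W_{ij}\,x_i + b_j \\
\frac{\partial y_j}{\partial W_{ij}} &= x_i, \qquad
\frac{\partial y_j}{\partial b_j} = 1, \qquad
\frac{\partial y_j}{\partial x_i} = W_{ij}
\end{align*}
```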

Sigmoid function

reciprocal rule = chain & power rule

exponent rule
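
The sigmoid derivative follows from applying the reciprocal rule to 1 / (1 + e^(-x)) and the exponent rule to e^(-x), which gives e^(-x) / (1 + e^(-x))^2. A hedged sketch that checks this expression against a finite-difference estimate; the function names and the step size `h` are illustrative assumptions.

```python
import numpy as np

def sigmoid(x):
    return 1 / (1 + np.exp(-x))

def d_sigmoid(x):
    # Reciprocal rule on 1 / (1 + e^-x); the inner derivative of e^-x is -e^-x,
    # and the two minus signs cancel.
    return np.exp(-x) / (1 + np.exp(-x)) ** 2

x = np.linspace(-3, 3, 7)
h = 1e-6
numeric = (sigmoid(x + h) - sigmoid(x - h)) / (2 * h)  # central difference

print(np.max(np.abs(d_sigmoid(x) - numeric)))
```

The same derivative is often written as sigmoid(x) * (1 - sigmoid(x)); the two forms are algebraically identical.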

import numpy as np
import matplotlib.pyplot as plt

x = np.array([
    [2002, 300_000],
    [2012, 100_000],
    [2018, 1_000],
])

y = np.array([
    [2000],
    [10_000],
    [30_000],
])

x_mean = np.mean(x, axis=0)
x_std = np.std(x, axis=0)
x = (x - x_mean) / x_std
print(x.shape)

y_mean = np.mean(y, axis=0)
y_std = np.std(y, axis=0)
y = (y - y_mean) / y_std

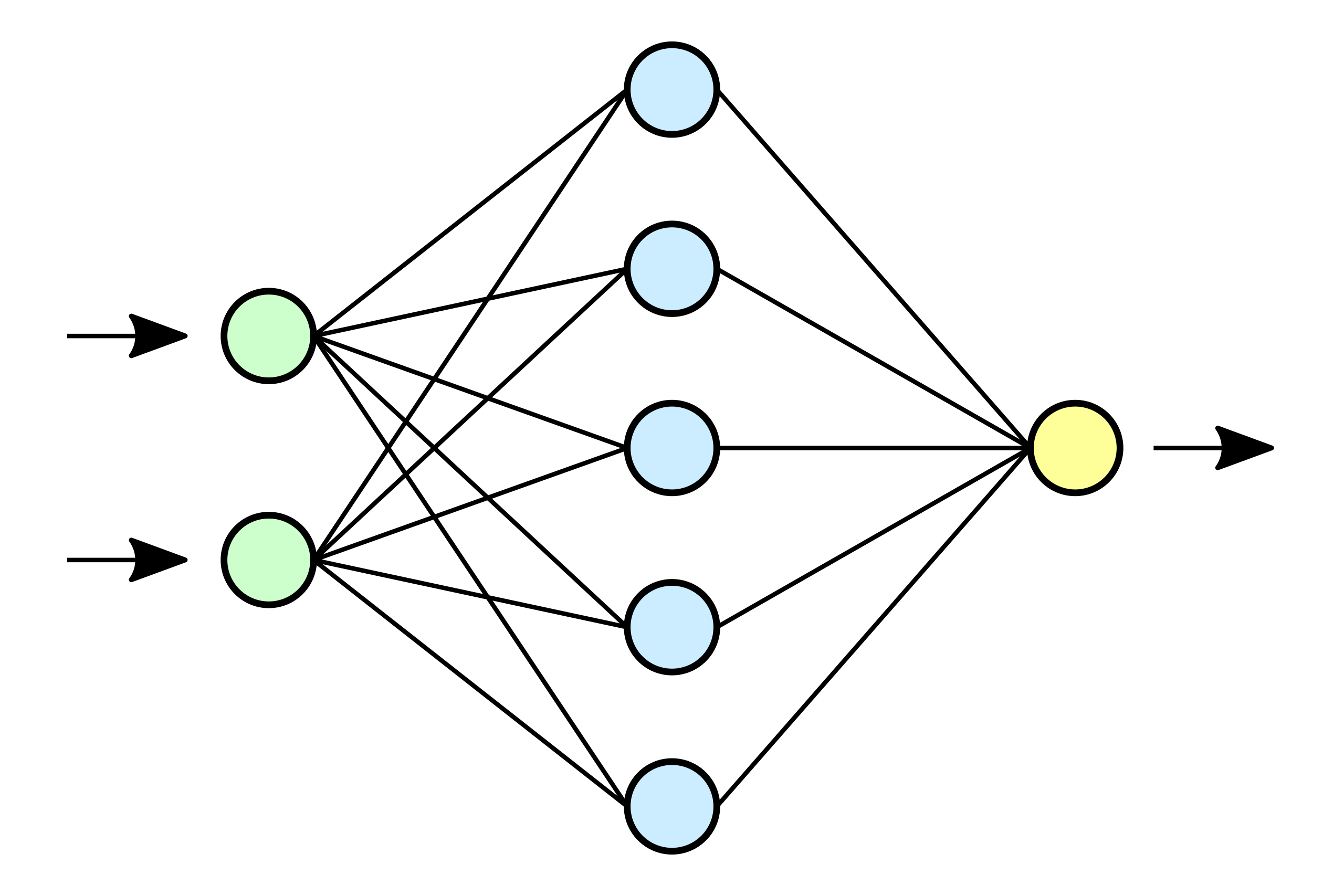

W_1 = np.ones((2, 4))
b_1 = np.zeros((4, ))

W_2 = np.ones((4, 1))
b_2 = np.zeros((1, ))

alpha = 0.1

losses = []
for epoch in range(10):
    print(x)
    # Forward pass: linear layer 1 -> sigmoid -> linear layer 2
    linear_1_w = W_1.T @ x[:, :, np.newaxis]
    linear_1 = linear_1_w[:, :, 0] + b_1
    sigmoid_2 = 1 / (1 + np.exp(-linear_1))
    linear_2_w = W_2.T @ sigmoid_2[:, :, np.newaxis]
    y_prim = linear_2_w[:, :, 0] + b_2

    print(y)
    print(y_prim)

    # MAE loss (MSE alternative left commented out)
    loss = np.mean(np.abs(y_prim - y))
    #loss = np.mean((y - y_prim)** 2)
    losses.append(loss)

    # Derivative of the loss w.r.t. y_prim; 1e-8 avoids division by zero
    d_loss = (y_prim - y)/(np.abs(y_prim - y) + 1e-8)
    #d_loss = -2*(y - y_prim)

    # Gradients of linear layer 2
    d_W_2 = sigmoid_2[:, :, np.newaxis] @ d_loss[:, :, np.newaxis]
    d_b_2 = 1 * d_loss

    # Gradient passed back to the sigmoid output
    d_x_2 = W_2 @ d_loss[:, :, np.newaxis]
    d_x_2 = d_x_2[:, :, 0]

    # Sigmoid derivative, chained with the incoming gradient
    d_x_sigmoid = (np.exp(-linear_1)/(1 + np.exp(-linear_1))**2)
    d_x_sigmoid_chain = d_x_sigmoid * d_x_2

    # Gradients of linear layer 1 (bias gradient is the chained gradient itself, not its shape)
    d_W_1 = x[:, :, np.newaxis] @ d_x_sigmoid_chain[:, np.newaxis, :]
    d_b_1 = 1 * d_x_sigmoid_chain

    # Gradient descent update, averaging gradients over the batch
    W_1 = W_1 - np.mean(d_W_1, axis=0) * alpha
    b_1 = b_1 - np.mean(d_b_1, axis=0) * alpha

    W_2 = W_2 - np.mean(d_W_2, axis=0) * alpha
    b_2 = b_2 - np.mean(d_b_2, axis=0) * alpha

plt.plot(losses)
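
Because `y` was standardized before training, the model's outputs are in standardized units as well. A small sketch of mapping values back to the original scale, using the same `y` data as above; the helper `denormalize` is hypothetical and not part of the original notes.

```python
import numpy as np

y = np.array([[2000], [10_000], [30_000]], dtype=float)
y_mean = np.mean(y, axis=0)
y_std = np.std(y, axis=0)
y_norm = (y - y_mean) / y_std  # same standardization as in the notes

def denormalize(values, mean, std):
    """Invert (v - mean) / std. Hypothetical helper for illustration."""
    return values * std + mean

y_restored = denormalize(y_norm, y_mean, y_std)
print(y_restored)
```

The same call could be applied to `y_prim` after training to read predictions in the original units.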