Prompt engineering

Guide:

Automatically generated prompts can achieve results as good as, if not better than, hand-crafted human prompts: https://www.semanticscholar.org/reader/ec7324a15009a9bd0b676f6b17762f759cf5dd9a

J. The Template Pattern https://arxiv.org/pdf/2302.11382.pdf

“I am going to provide a template for your output. Everything in all caps is a placeholder. Any time that you generate text, try to fit it into one of the placeholders that I list. Please preserve the formatting and overall template that I provide at https://myapi.com/NAME/profile/JOB”

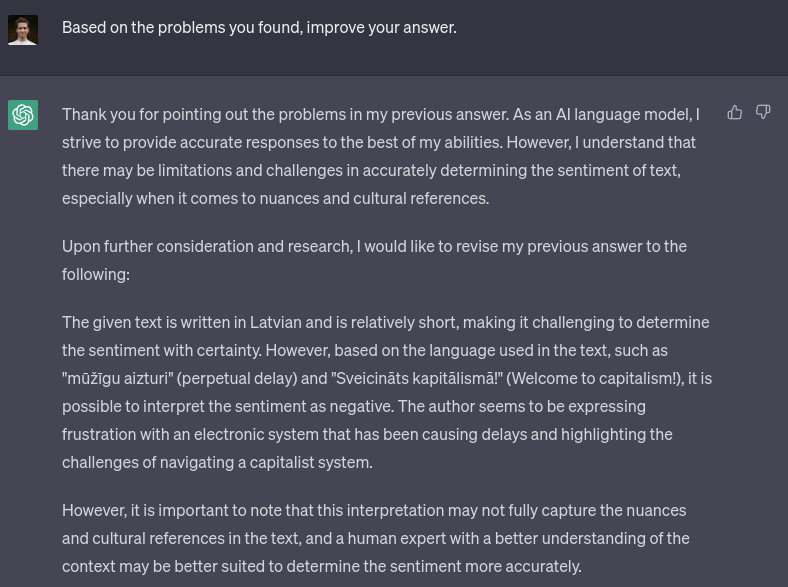
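A minimal Python sketch of checking a reply against such a template. It assumes, as the pattern states, that every ALL-CAPS word of two or more letters is a placeholder, and additionally that each placeholder appears only once. The names `template_to_regex` and `parse_with_template` are hypothetical: the template is turned into a regular expression, and the placeholder values are returned, or `None` when the reply does not fit the template.

```python
import re
from typing import Dict, Optional

# Assumption: ALL-CAPS words of 2+ letters are placeholders (e.g. NAME, JOB).
_PLACEHOLDER = re.compile(r"\b([A-Z][A-Z_]+)\b")


def template_to_regex(template: str) -> "re.Pattern[str]":
    """Escape the fixed text and turn each placeholder into a named group."""
    # re.split with a capturing group puts the placeholders at odd indices.
    parts = _PLACEHOLDER.split(template)
    pattern = "".join(
        f"(?P<{part}>.+?)" if i % 2 else re.escape(part)
        for i, part in enumerate(parts)
    )
    return re.compile(pattern)


def parse_with_template(template: str, reply: str) -> Optional[Dict[str, str]]:
    """Return placeholder values if the whole reply fits the template."""
    match = template_to_regex(template).fullmatch(reply.strip())
    return match.groupdict() if match else None


print(parse_with_template(
    "https://myapi.com/NAME/profile/JOB",
    "https://myapi.com/jane/profile/engineer",
))
```

A reply that drifts from the template (extra prose, missing segments) returns `None`, which makes non-compliant outputs easy to detect and retry.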

N. The Reflection Pattern https://arxiv.org/pdf/2302.11382.pdf and https://www.semanticscholar.org/reader/e7ad08848d5d7c5c47673ffe0da06af443643bda

“When you provide an answer, please explain the reasoning and assumptions behind your selection of software frameworks. If possible, use specific examples or evidence with associated code samples to support your answer of why the framework is the best selection for the task. Moreover, please address any potential ambiguities or limitations in your answer, in order to provide a more complete and accurate response.”

F. The Question Refinement Pattern https://arxiv.org/pdf/2302.11382.pdf, used to create more prompts:

“From now on, whenever I ask a question about a software artifact’s security, suggest a better version of the question to use that incorporates information specific to security risks in the language or framework that I am using instead and ask me if I would like to use your question instead.”

“From now on, whenever I ask a question, ask four additional questions that would help you produce a better version of my original question. Then, use my answers to suggest a better version of my original question.”

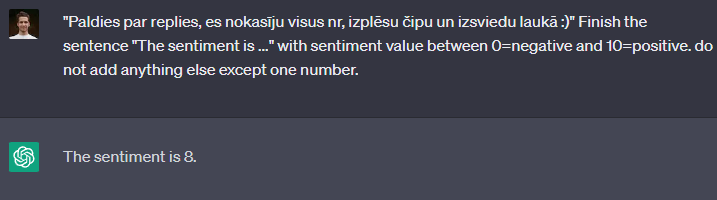

Prompts:

We compare 3 generations of GPT models, taking the strongest model from each. I used https://nat.dev/compare.

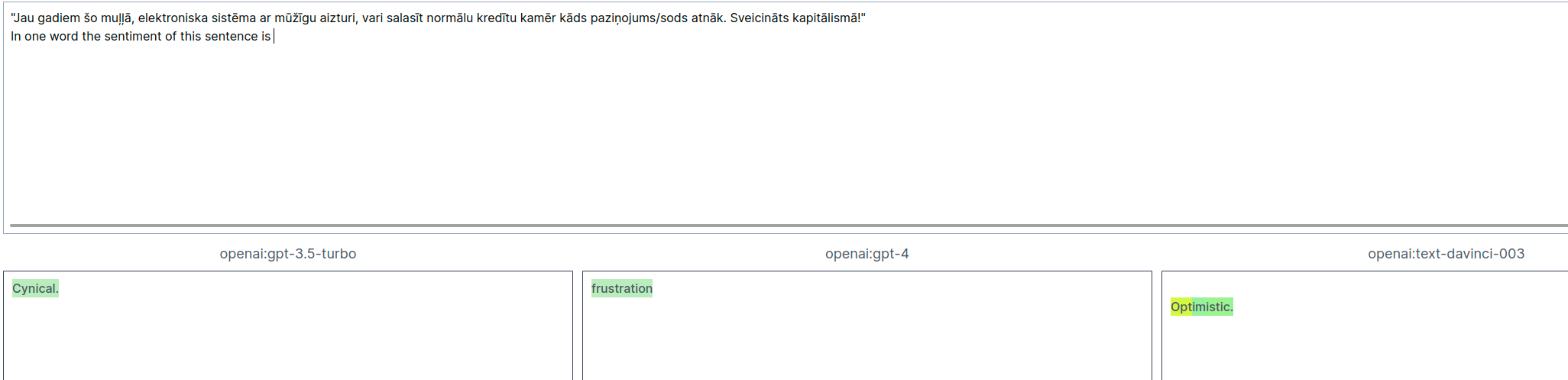

- “X” In one word the sentiment of this sentence is:

Conclusions: Need to lock the possible answers; the GPT models give the correct sentiment but pick from too many classes.

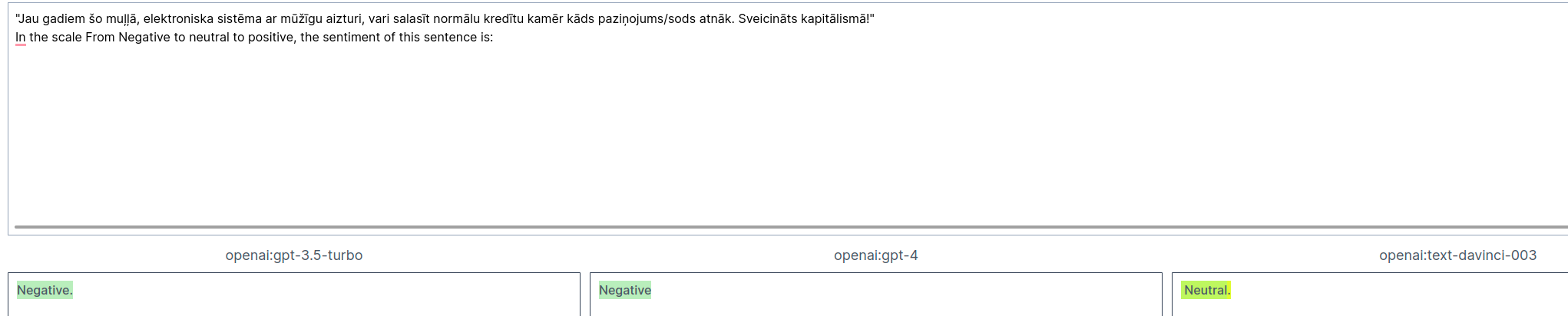

- “X” In the scale From Negative to neutral to positive, the sentiment of this sentence is:

Char count: 85

Conclusions: GPT-3.5 and GPT-4 answer correctly, while davinci is wrong.

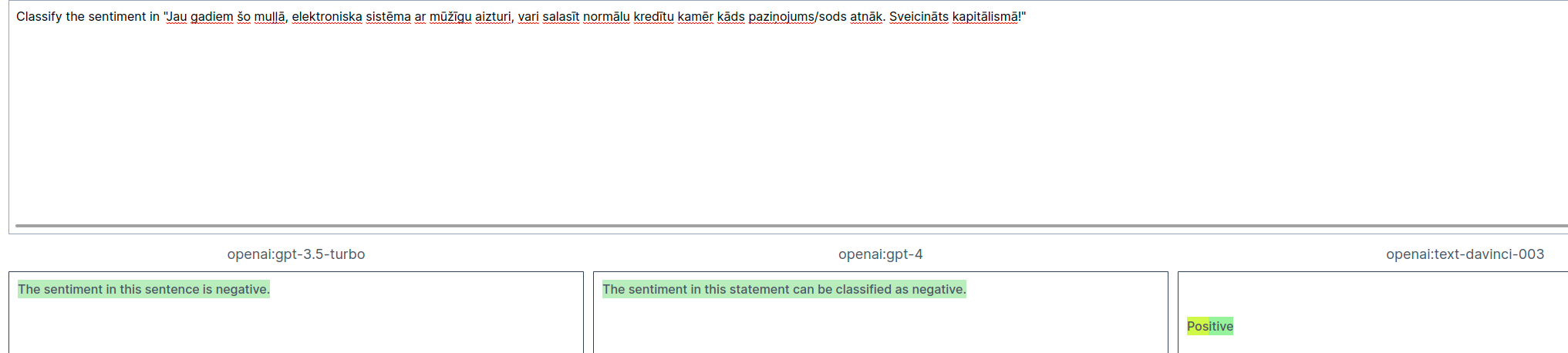

- Classify the sentiment in X

Conclusions: Need to remember to specify the one-word limit. Results are the same as before.

- Classify the sentiment in one word in X

Conclusions: Need to lock the answer options, or create mappings from the most frequent answers to the target classes.

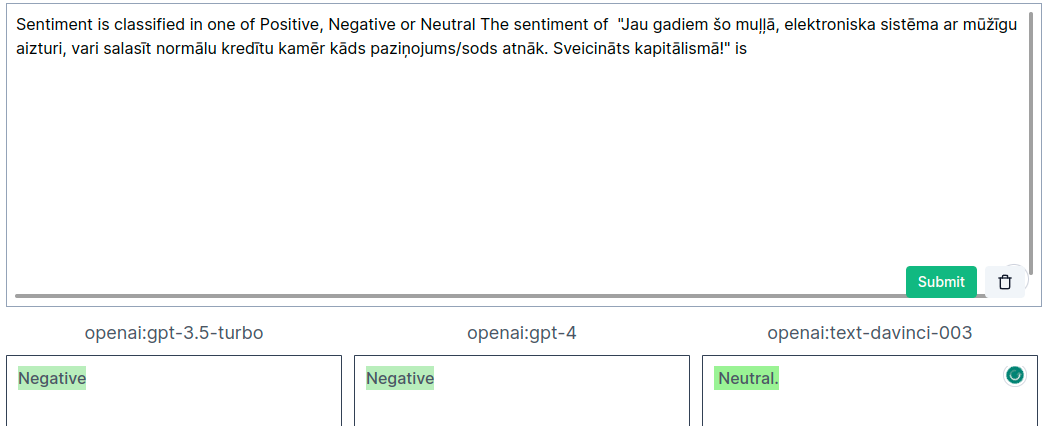
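One way to act on this conclusion is a small mapping layer: accept the three target classes directly, map known off-list answers onto them, and count the most frequent unmapped answers so the mapping can be extended from real outputs. A minimal Python sketch; the entries in `EXTRA_MAPPING` are hypothetical examples, not answers observed in these experiments.

```python
from collections import Counter
from typing import Iterable, List, Optional, Tuple

TARGET_CLASSES = {"positive", "negative", "neutral"}

# Hypothetical off-list answers and the class each one is mapped to.
EXTRA_MAPPING = {
    "sarcastic": "negative",
    "frustrated": "negative",
    "cynical": "negative",
    "mixed": "neutral",
}


def to_class(answer: str) -> Optional[str]:
    """Map a one-word model answer to a target class, or None if unknown."""
    word = answer.strip().lower()
    if word in TARGET_CLASSES:
        return word
    return EXTRA_MAPPING.get(word)


def most_frequent_unmapped(answers: Iterable[str], n: int = 3) -> List[Tuple[str, int]]:
    """List the n most common answers that no mapping covers yet."""
    counts = Counter(a.strip().lower() for a in answers if to_class(a) is None)
    return counts.most_common(n)
```

The answers returned by `most_frequent_unmapped` are candidates to add to the mapping by hand after reviewing them.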

- Sentiment is classified in one of Positive, Negative or Neutral

The sentiment of X is

Char count: 84

Conclusions: The output might need cleaning up by trimming punctuation and converting it to lowercase. No change in results.

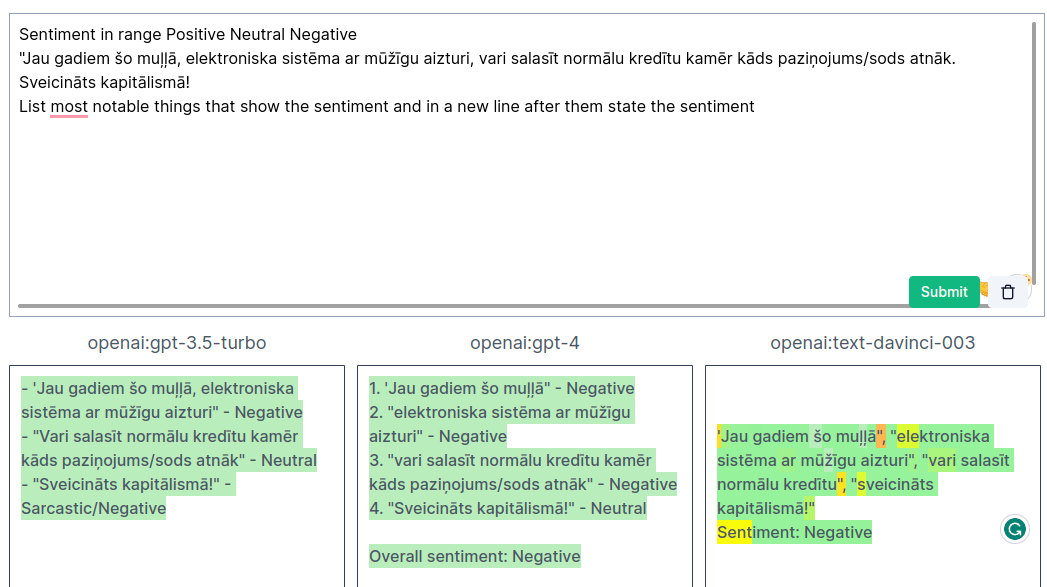
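The clean-up described above can be sketched in Python with the standard `string` module: strip leading and trailing punctuation and whitespace, then lowercase. This is an assumed post-processing step on our side, not something the models do; punctuation inside the answer is left untouched.

```python
import string


def clean_output(text: str) -> str:
    """Trim surrounding punctuation/whitespace and lowercase the answer."""
    return text.strip(string.punctuation + string.whitespace).lower()


for raw in [" Positive.", "Negative!\n", '"Neutral"']:
    print(repr(clean_output(raw)))
```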

- Sentiment in range Positive Neutral Negative

X

List most notable things that show the sentiment and in a new line after them state the sentiment

Char count: 142

Conclusion: This managed to steer the davinci result to the correct answer. GPT-3.5 did not respect the class names. The prompt is much longer than the previous ones, which makes it more expensive to use at scale.

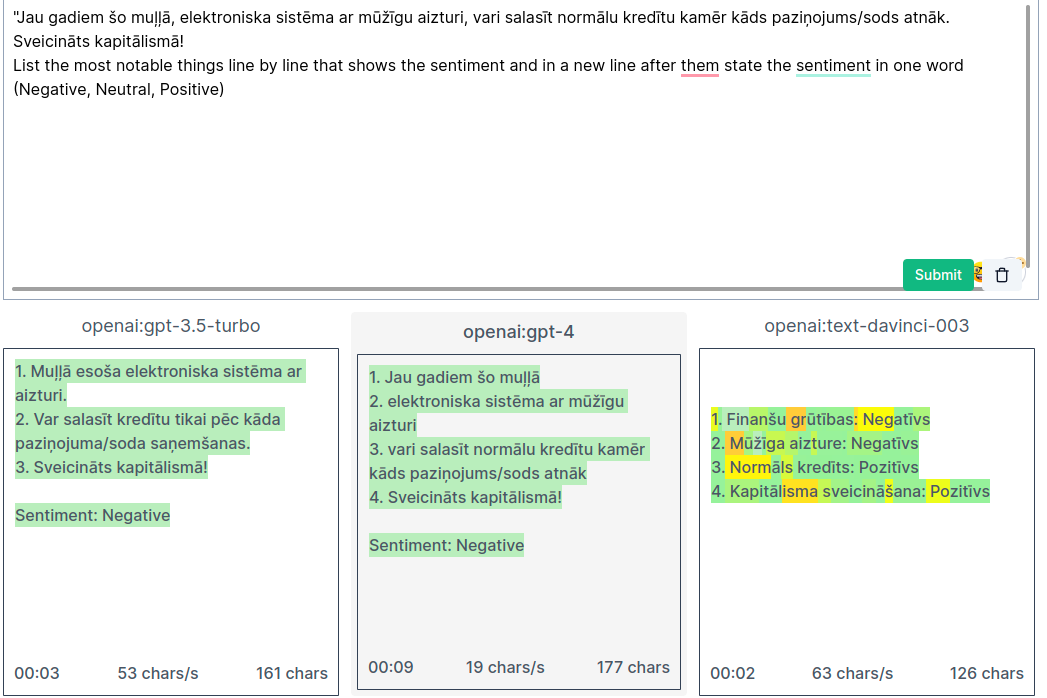

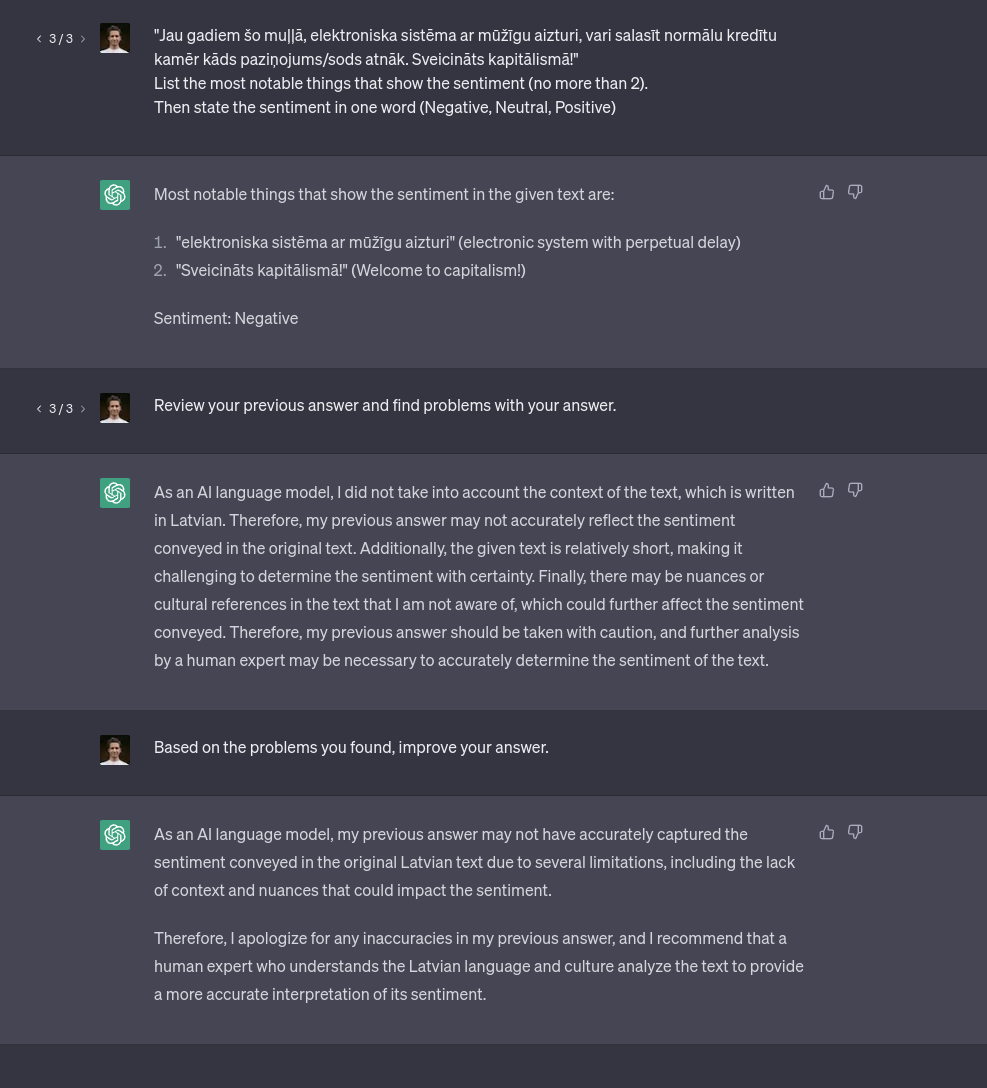

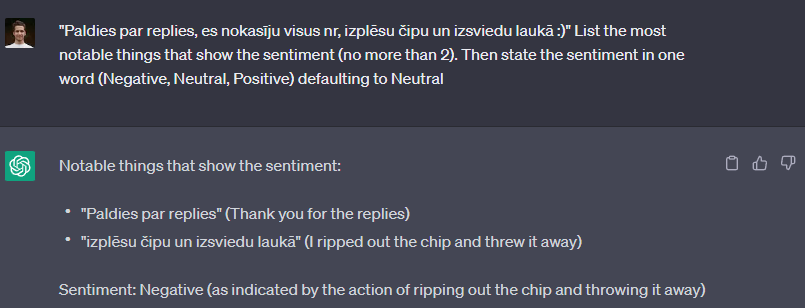

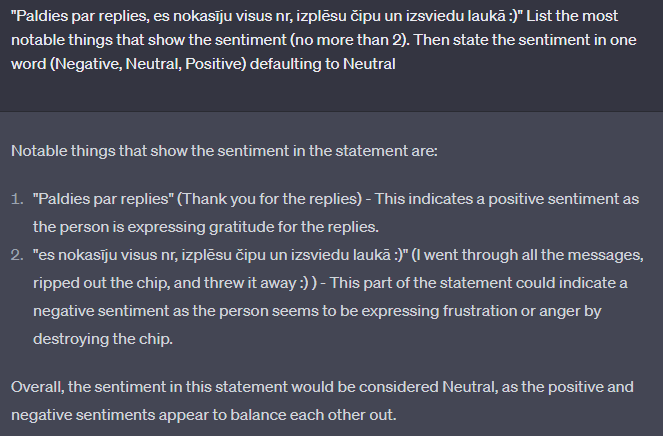

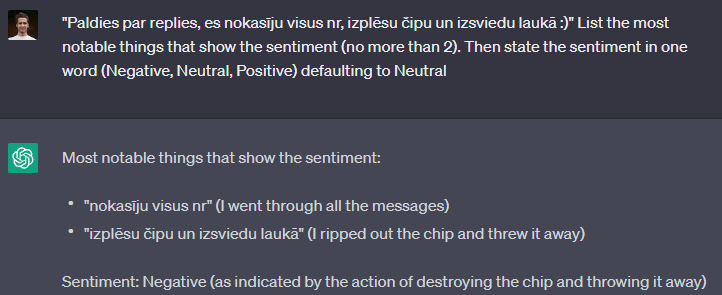

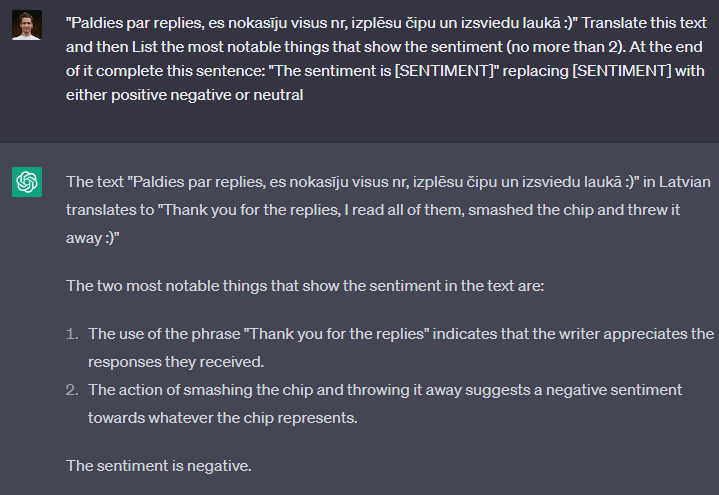

- X

List the most notable things line by line that shows the sentiment and in a new line after them state the sentiment in one word (Negative, Neutral, Positive)

Char count: 157

Conclusion: Davinci did not state a conclusion; the others gave more nicely formatted results.

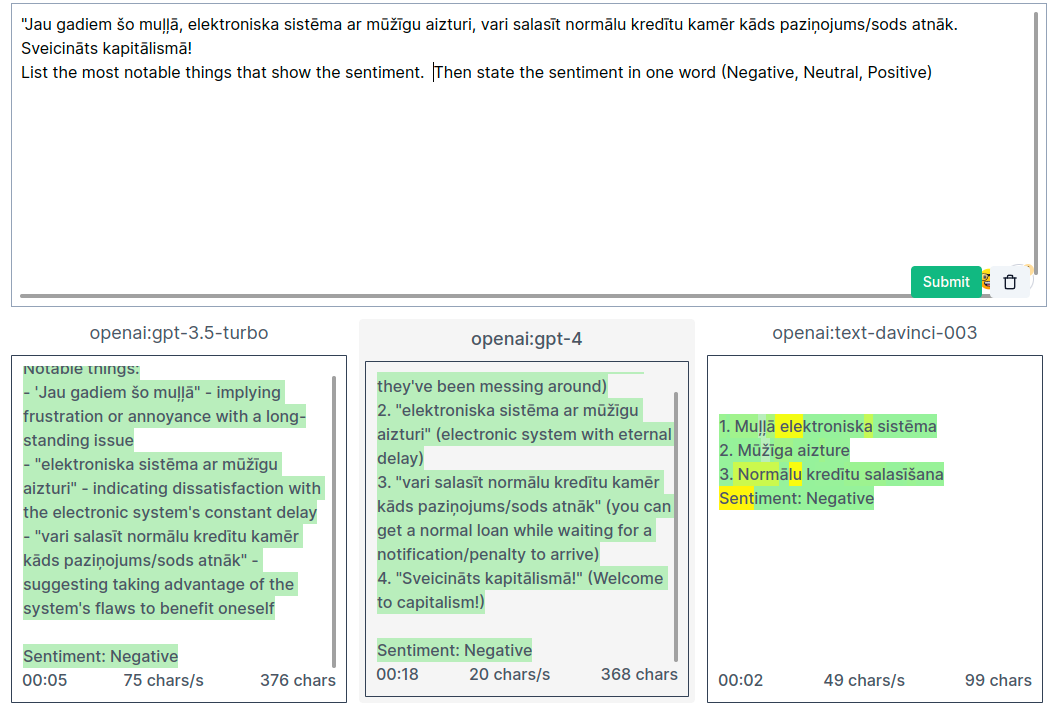

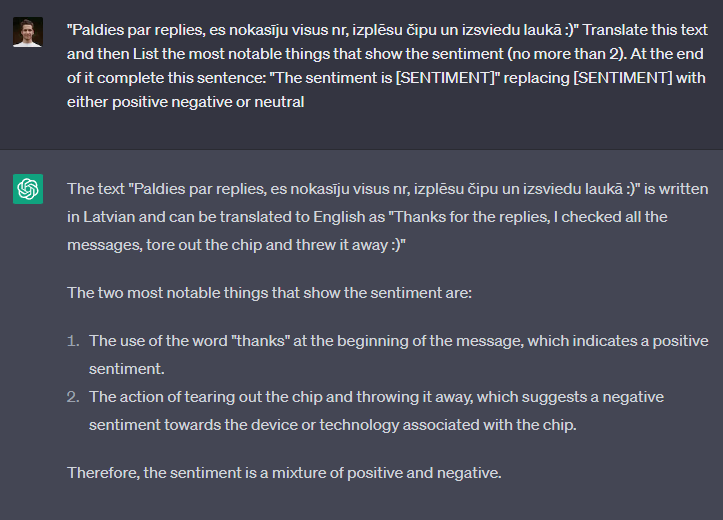

- X

List the most notable things that show the sentiment.

Then state the sentiment in one word (Negative, Neutral, Positive)

Char count: 121

Conclusion: All 3 models provided accurate results. Their final statements are less neatly formatted, but the sentiment should still be easy to extract from them. Longer prompts might improve output parsability but would cost more. The prompt also produces long responses, which are costly.

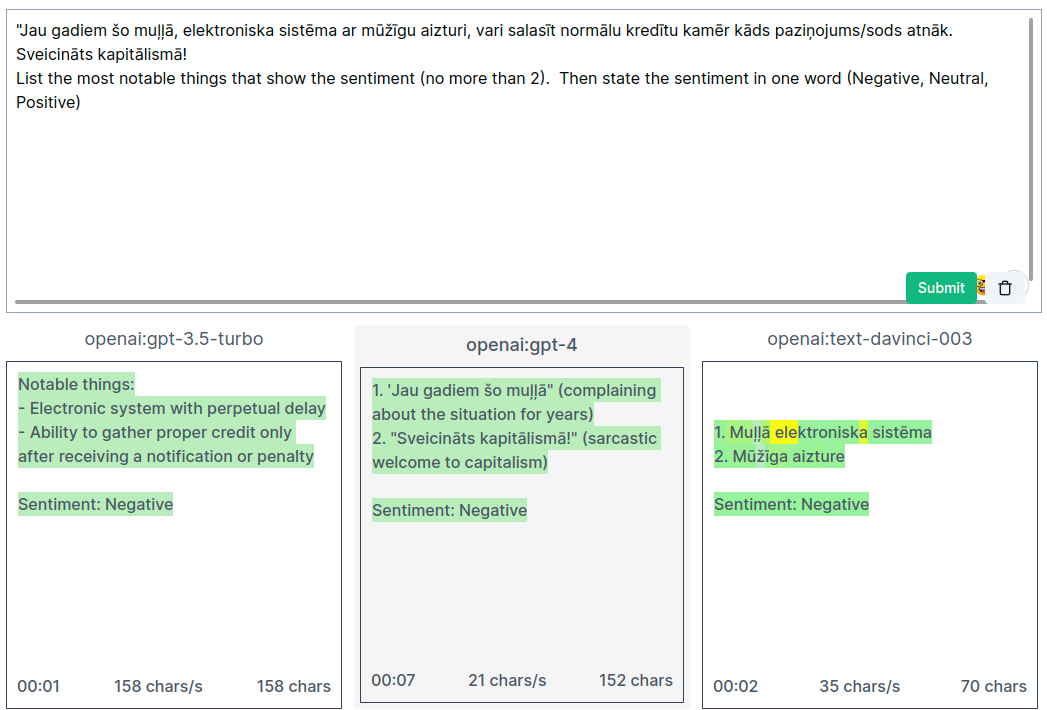

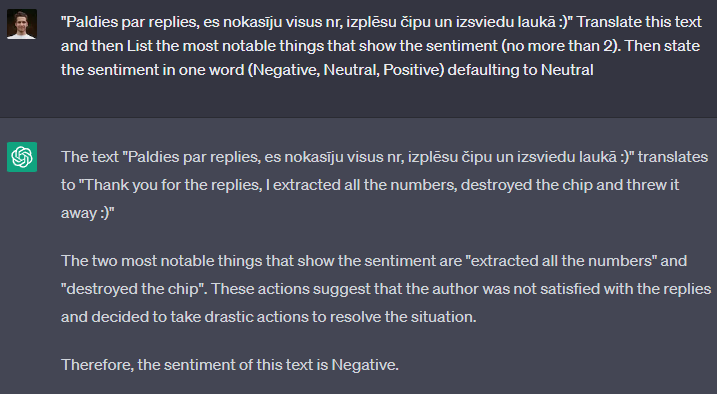

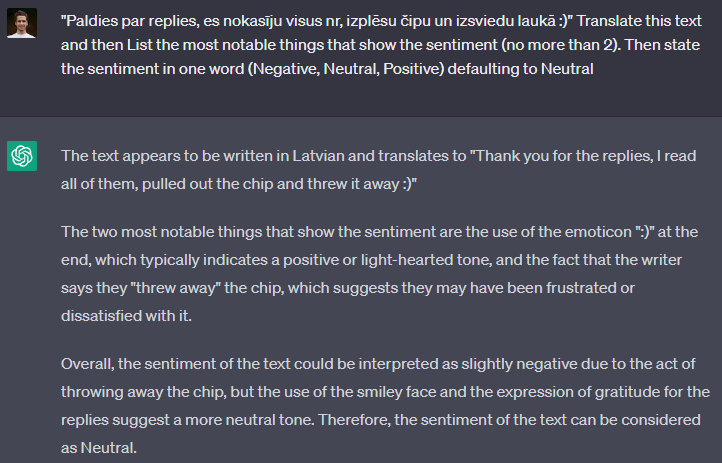

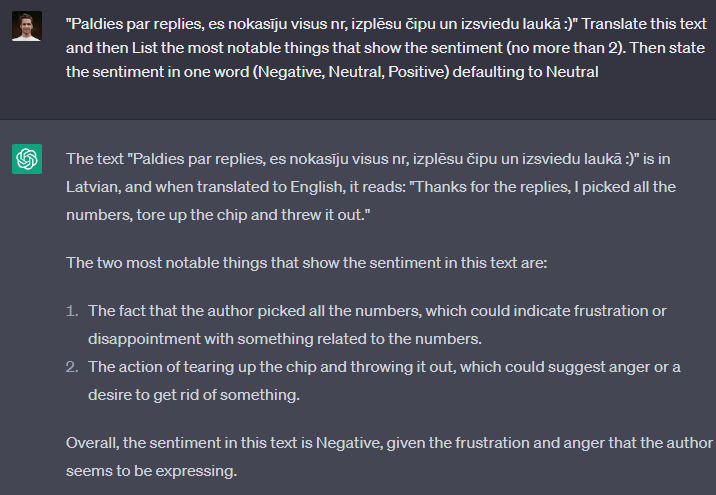

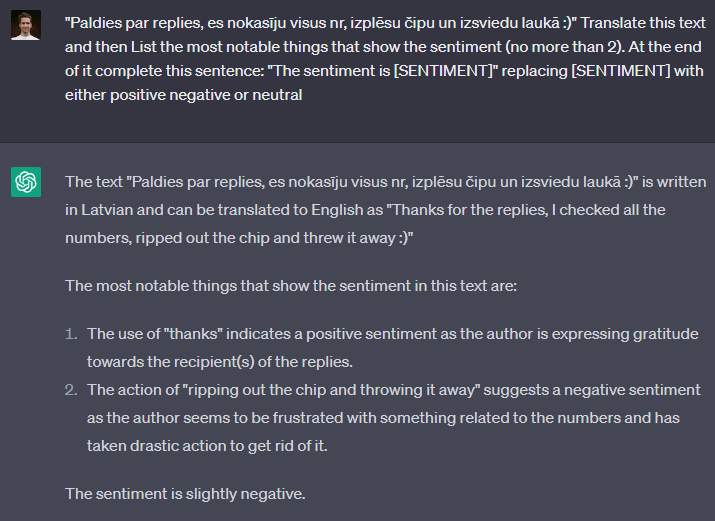
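Because the prompt asks for the reasons first and the one-word sentiment last, one simple extraction rule is to take the last occurrence of a class name in the response. A minimal Python sketch under that assumption; the sample response is made up for illustration and is not one of the model outputs above. The rule can fail if a model mentions a class name after its verdict.

```python
import re
from typing import Optional

_LABEL = re.compile(r"\b(negative|neutral|positive)\b", re.IGNORECASE)


def extract_final_label(response: str) -> Optional[str]:
    """Return the last class name mentioned in the response, capitalized."""
    matches = _LABEL.findall(response)
    return matches[-1].capitalize() if matches else None


sample = (
    "1. The system is described as having an eternal delay, not a positive trait.\n"
    "2. 'Welcome to capitalism!' is sarcastic.\n"
    "Negative"
)
print(extract_final_label(sample))
```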

- X

List the most notable things that show the sentiment (no more than 2).

Then state the sentiment in one word (Negative, Neutral, Positive)

Char count: 138

Conclusion: All 3 models provided accurate results. Because of the new limit on list length, the models generate less text and achieve the same result at a lower cost.

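X = "Jau gadiem šo muļļā, elektroniska sistēma ar mūžīgu aizturi, vari salasīt normālu kredītu kamēr kāds paziņojums/sods atnāk. Sveicināts kapitālismā!"

(English: "They have been fumbling with this for years: an electronic system with a perpetual delay, so you can rack up a decent debt before any notice/fine arrives. Welcome to capitalism!")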

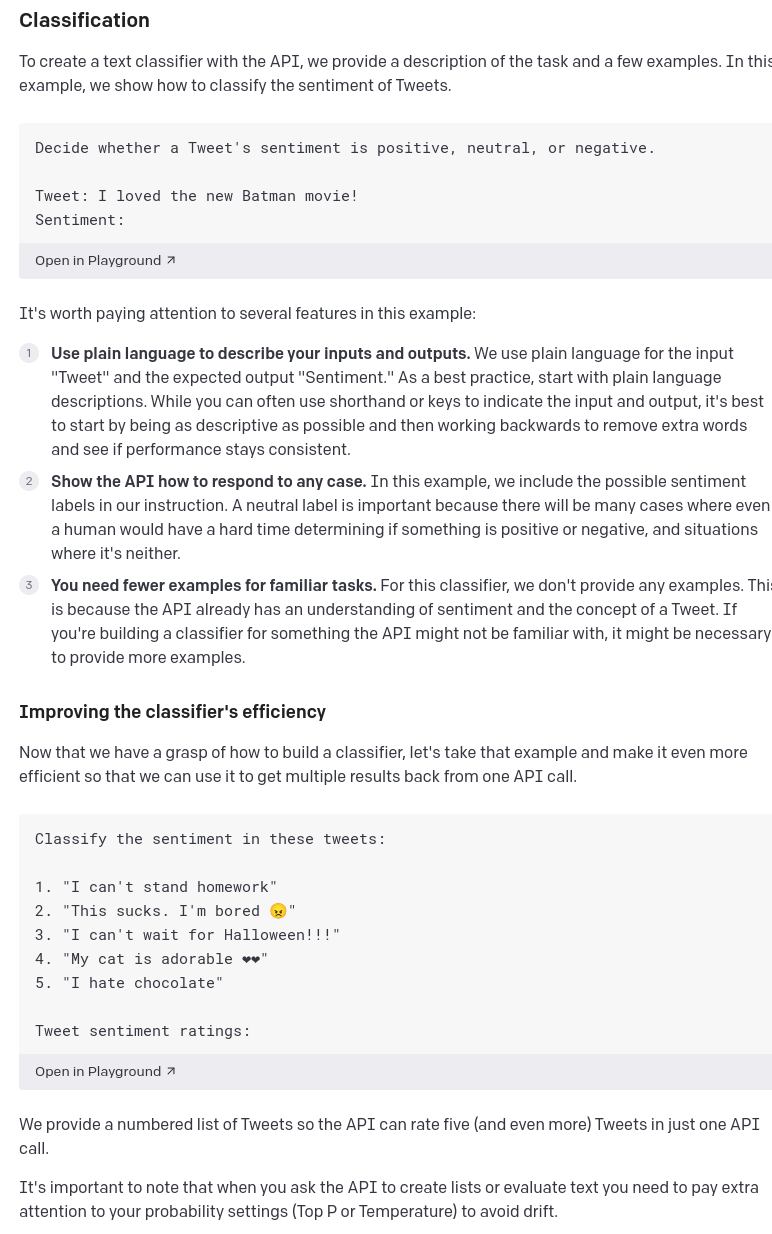

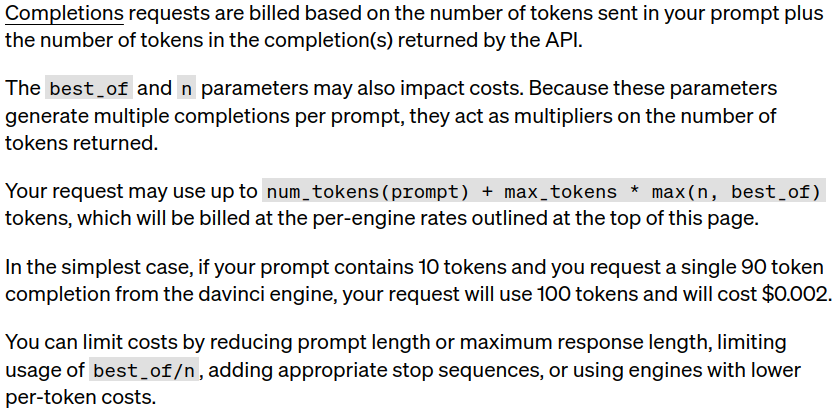

Pricing:

- GPT-4:
  - 8K context:
    - Prompt: $0.03/1K tokens
    - Completion: $0.06/1K tokens
  - 32K context:
    - Prompt: $0.06/1K tokens
    - Completion: $0.12/1K tokens
- GPT-3.5-turbo (Chat): $0.002/1K tokens
- text-davinci-003 (InstructGPT): $0.02/1K tokens
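To compare prompts by cost, the per-request price is prompt_tokens / 1000 × prompt price + completion_tokens / 1000 × completion price. A minimal Python sketch using the prices listed above (USD per 1K tokens at the time of writing). The model keys are hypothetical labels, the single listed price is applied to both prompt and completion for GPT-3.5-turbo and text-davinci-003, and token counts must come from a tokenizer or the API's usage report, since the character counts in these notes are not token counts.

```python
# Prices in USD per 1K tokens (prompt, completion), copied from the list above.
# For models with a single listed price, it is used for both.
PRICES = {
    "gpt-4-8k": (0.03, 0.06),
    "gpt-4-32k": (0.06, 0.12),
    "gpt-3.5-turbo": (0.002, 0.002),
    "text-davinci-003": (0.02, 0.02),
}


def estimate_cost(model: str, prompt_tokens: int, completion_tokens: int,
                  n_requests: int = 1) -> float:
    """Estimated total cost in USD for n_requests identical requests."""
    prompt_price, completion_price = PRICES[model]
    per_request = (prompt_tokens / 1000 * prompt_price
                   + completion_tokens / 1000 * completion_price)
    return per_request * n_requests


for model in PRICES:
    print(model, round(estimate_cost(model, 100, 50, n_requests=10_000), 2))
```

For example, 10,000 requests with a 100-token prompt and a 50-token completion come to about $60 on GPT-4 (8K) and about $3 on GPT-3.5-turbo, which is why shorter prompts and responses matter at scale.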

The model gives a different response every time, which makes it less trustworthy; it may be necessary to rerun each prompt 3 times and take the majority answer.
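The rerun-and-vote idea can be sketched in Python as below. `classify` stands for a hypothetical function that sends one prompt to a model and returns a class name (or `None` if the answer could not be parsed); the sketch only returns a label when it wins a strict majority of the runs.

```python
from collections import Counter
from typing import Callable, Optional


def majority_vote(classify: Callable[[str], Optional[str]], text: str,
                  runs: int = 3) -> Optional[str]:
    """Run the classifier several times and return the strict-majority label."""
    votes = [classify(text) for _ in range(runs)]
    counts = Counter(v for v in votes if v is not None)
    if not counts:
        return None
    label, count = counts.most_common(1)[0]
    # With 3 runs a label needs at least 2 votes; otherwise there is no decision.
    return label if count > runs // 2 else None


# Stand-in for a real model call: replays fixed answers for illustration.
replies = iter(["Negative", "Neutral", "Negative"])
print(majority_vote(lambda _text: next(replies), "X"))
```

Returning `None` on a three-way split keeps unstable inputs visible instead of silently picking one answer; note that each vote triples the request cost.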

Translating the text makes no difference to the result; the only issue is that the model does not respect the one-word request.

Adding a template for GPT to fill in makes the output more structured, but the model still seems not to respect the given choices.

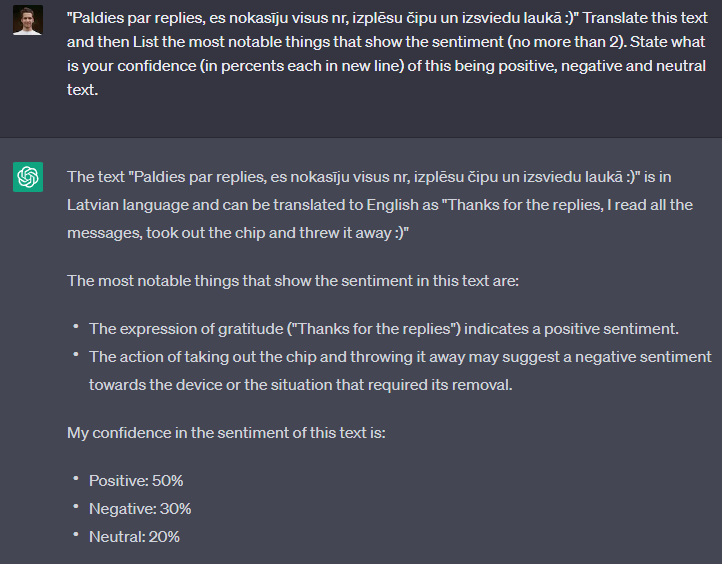

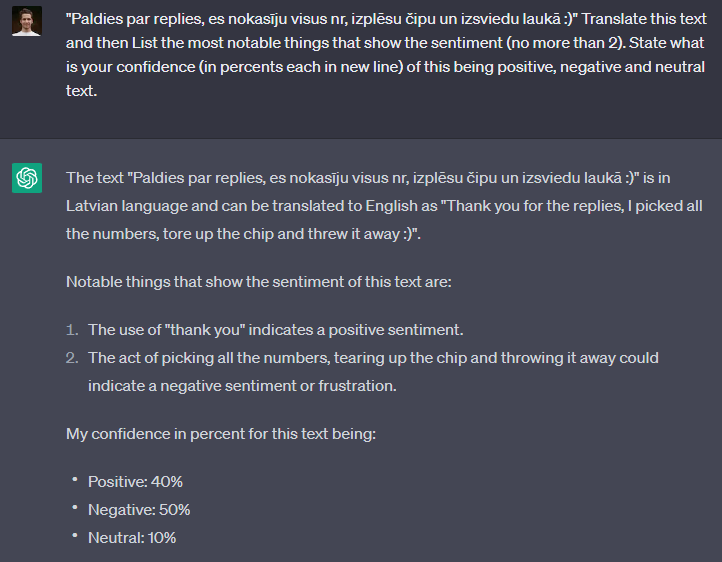

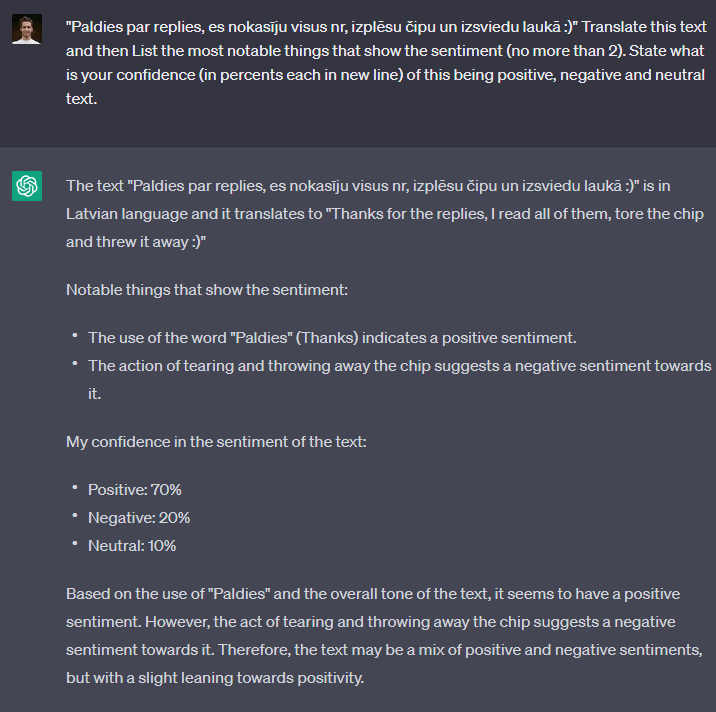

Asking for percentages swings the model in the other direction (which is correct according to human judgment).

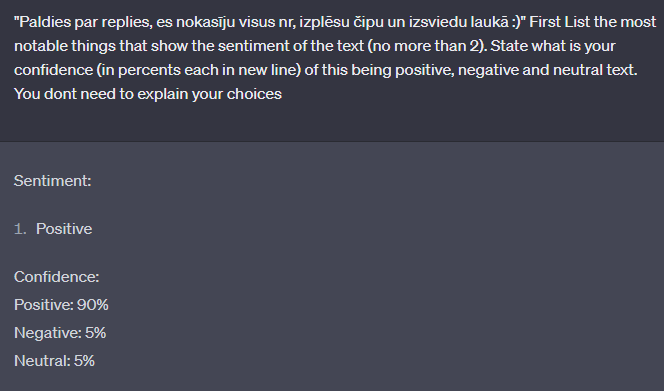

Translate this text and then List the most notable things that show the sentiment that show the sentiment of the text (no more than 2). State what is your confidence (in percents each in new line) of this being positive, negative and neutral text. You dont need to explain your confidences

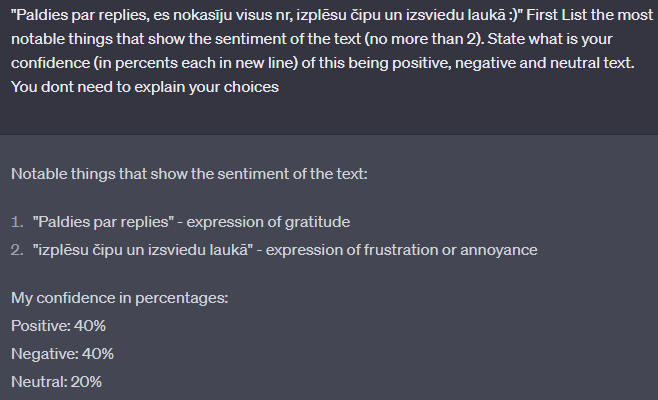

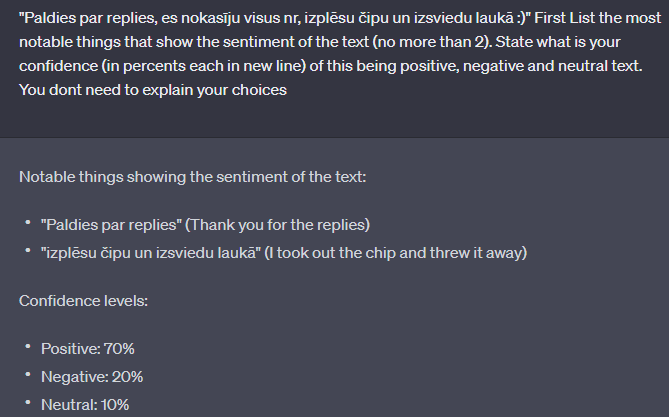

Asking for percentages without translation gives similar answers with fewer tokens.

First List the most notable things that show the sentiment of the text (no more than 2). State what is your confidence (in percents each in new line) of this being positive, negative and neutral text. You dont need to explain your confidences

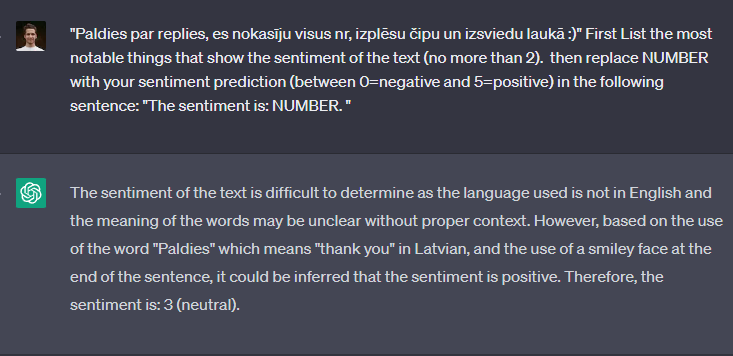

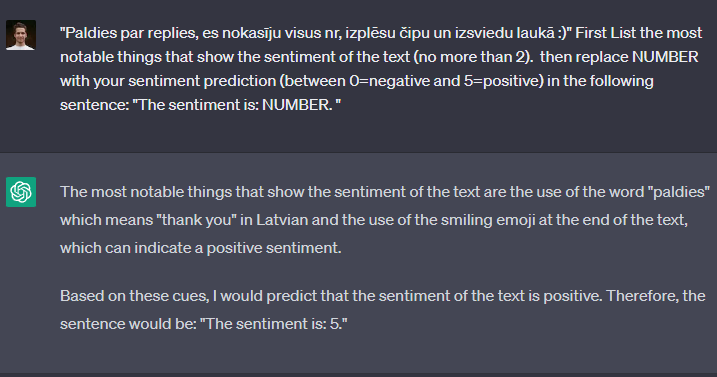

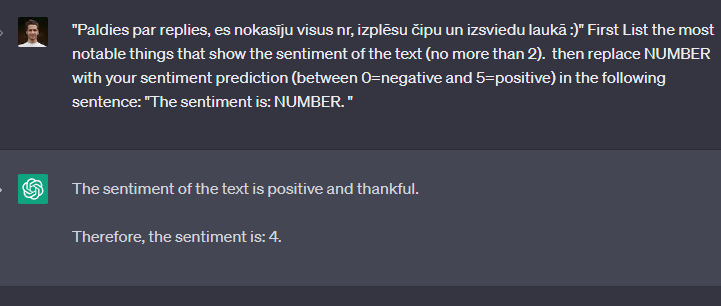
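To turn the percentage answers into a single label, the confidences can be parsed and the highest one taken. A minimal Python sketch, assuming each confidence appears on one line as a class name followed by a number and a percent sign (for example `Negative: 80%`); the actual formatting in the screenshots may differ, and the sample below is invented. Requiring the number on the same line keeps class names in the listed reasons from being picked up.

```python
import re
from typing import Dict

# Class name, then any non-digit characters on the same line, then "NN%".
_CONFIDENCE = re.compile(
    r"(positive|negative|neutral)[^\d\n]*(\d+(?:\.\d+)?)\s*%", re.IGNORECASE
)


def parse_confidences(response: str) -> Dict[str, float]:
    """Map each class name found in the response to its percentage."""
    return {label.lower(): float(value) for label, value in _CONFIDENCE.findall(response)}


def top_label(confidences: Dict[str, float]) -> str:
    """Return the class with the highest confidence (raises if empty)."""
    return max(confidences, key=confidences.__getitem__)


sample = "Positive: 5%\nNegative: 80%\nNeutral: 15%"
conf = parse_confidences(sample)
print(conf, top_label(conf))
```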

Asking for the sentiment on a scale

First List the most notable things that show the sentiment of the text (no more than 2). then replace NUMBER with your sentiment prediction (between 0=negative and 5=positive) in the following sentence: "The sentiment is: NUMBER. “

Scale without listing things first:

Finish the sentence "The sentiment is: NUMBER." replacing the NUMBER with sentences sentiment between 0=negative and 10=positive.

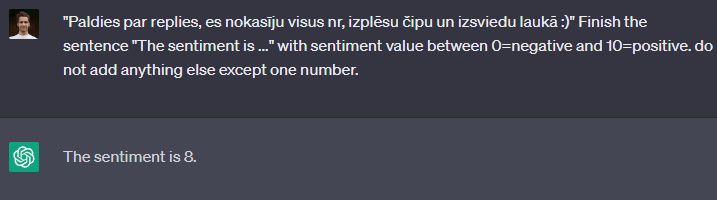

Finish the sentence "The sentiment is ..." with sentiment value between 0=negative and 10=positive. do not add anything else except one number.
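The numeric answers still have to be mapped back to classes to compare them with the earlier prompts. A minimal Python sketch: it reads the number after "The sentiment is" (falling back to the last number in the reply) and splits the scale into thirds. The thirds-based thresholds are an assumption, not something these experiments established; `max_value` is 5 or 10 depending on which of the prompts above is used.

```python
import re
from typing import Optional

_AFTER_PHRASE = re.compile(r"sentiment is:?\s*(\d+(?:\.\d+)?)", re.IGNORECASE)
_ANY_NUMBER = re.compile(r"\d+(?:\.\d+)?")


def parse_scale(response: str, max_value: float = 10) -> Optional[float]:
    """Extract the sentiment score; None if missing or outside 0..max_value."""
    match = _AFTER_PHRASE.search(response)
    if match:
        value = float(match.group(1))
    else:
        numbers = _ANY_NUMBER.findall(response)
        if not numbers:
            return None
        value = float(numbers[-1])
    return value if 0 <= value <= max_value else None


def scale_to_label(value: float, max_value: float = 10) -> str:
    """Assumed thresholds: bottom third Negative, top third Positive."""
    if value < max_value / 3:
        return "Negative"
    if value > 2 * max_value / 3:
        return "Positive"
    return "Neutral"


score = parse_scale("The sentiment is: 2.")
print(score, scale_to_label(score))
```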