2025-Q1-AI 18. GAN, DCGAN, WGAN

18.1. Materials / Video (🔴 10 June, 18:00, Wednesday, Riga, Zunda krastmala 10, 122)

Zoom: https://zoom.us/j/3167417956?pwd=Q2NoNWp2a3M2Y2hRSHBKZE1Wcml4Zz09

Materials:

https://arxiv.org/pdf/1406.2661.pdf

https://arxiv.org/pdf/1511.06434.pdf

Code from previous years: https://share.yellowrobot.xyz/quick/2024-5-28-3965B5B9-F3E9-40A6-8A73-953EE61402BA.zip

Previous year's video:

https://youtube.com/live/rD1R-GkrCxk?feature=share

Video: https://youtube.com/live/84eI97UjrDI?feature=share

Previous year's video: https://youtu.be/ftnIzUdNPYY

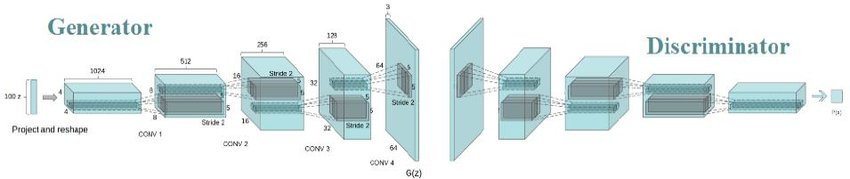

18.2. Implement DCGAN

Implement DCGAN following the instructions in the video.

Template: http://share.yellowrobot.xyz/quick/2023-5-18-EB1F688E-2225-47FD-BE8F-7FDE6255AAC4.zip

Submit screenshots of your best results and the program source code.
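DCGAN (arXiv 1511.06434 in the materials) builds the generator from transposed ("fractionally-strided") convolutions and the discriminator from strided convolutions instead of pooling. When wiring the layers it helps to check the spatial sizes by hand. The plain-Python sketch below does that arithmetic; the layer settings (kernel 4, stride 2, padding 1, producing 64x64 images) are an assumption taken from the common 64x64 DCGAN setup and may differ from the course template.

```python
# Shape arithmetic for a DCGAN-style generator and discriminator.
# Assumed layout: kernel 4 / stride 2 / padding 1 for every up/down-sampling
# layer, as in the common 64x64 DCGAN setup (the course template may differ).

def conv_transpose_out(size: int, kernel: int, stride: int, padding: int) -> int:
    """Output size of a transposed convolution (dilation=1, output_padding=0)."""
    return (size - 1) * stride - 2 * padding + kernel


def conv_out(size: int, kernel: int, stride: int, padding: int) -> int:
    """Output size of a regular convolution (dilation=1)."""
    return (size + 2 * padding - kernel) // stride + 1


# Generator: 1x1 latent "image" -> 4x4 -> 8x8 -> 16x16 -> 32x32 -> 64x64
GEN_LAYERS = [(4, 1, 0), (4, 2, 1), (4, 2, 1), (4, 2, 1), (4, 2, 1)]
# Discriminator mirrors it: 64x64 -> 32 -> 16 -> 8 -> 4 -> 1 (a single score)
DISC_LAYERS = [(4, 2, 1), (4, 2, 1), (4, 2, 1), (4, 2, 1), (4, 1, 0)]


def trace(size, layers, fn):
    """Return the spatial size before the first layer and after each layer."""
    sizes = [size]
    for kernel, stride, padding in layers:
        size = fn(size, kernel, stride, padding)
        sizes.append(size)
    return sizes


if __name__ == "__main__":
    print("generator:    ", trace(1, GEN_LAYERS, conv_transpose_out))
    print("discriminator:", trace(64, DISC_LAYERS, conv_out))
```

If a size in the trace is not the one you expect, the kernel/stride/padding of that layer is what needs changing.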

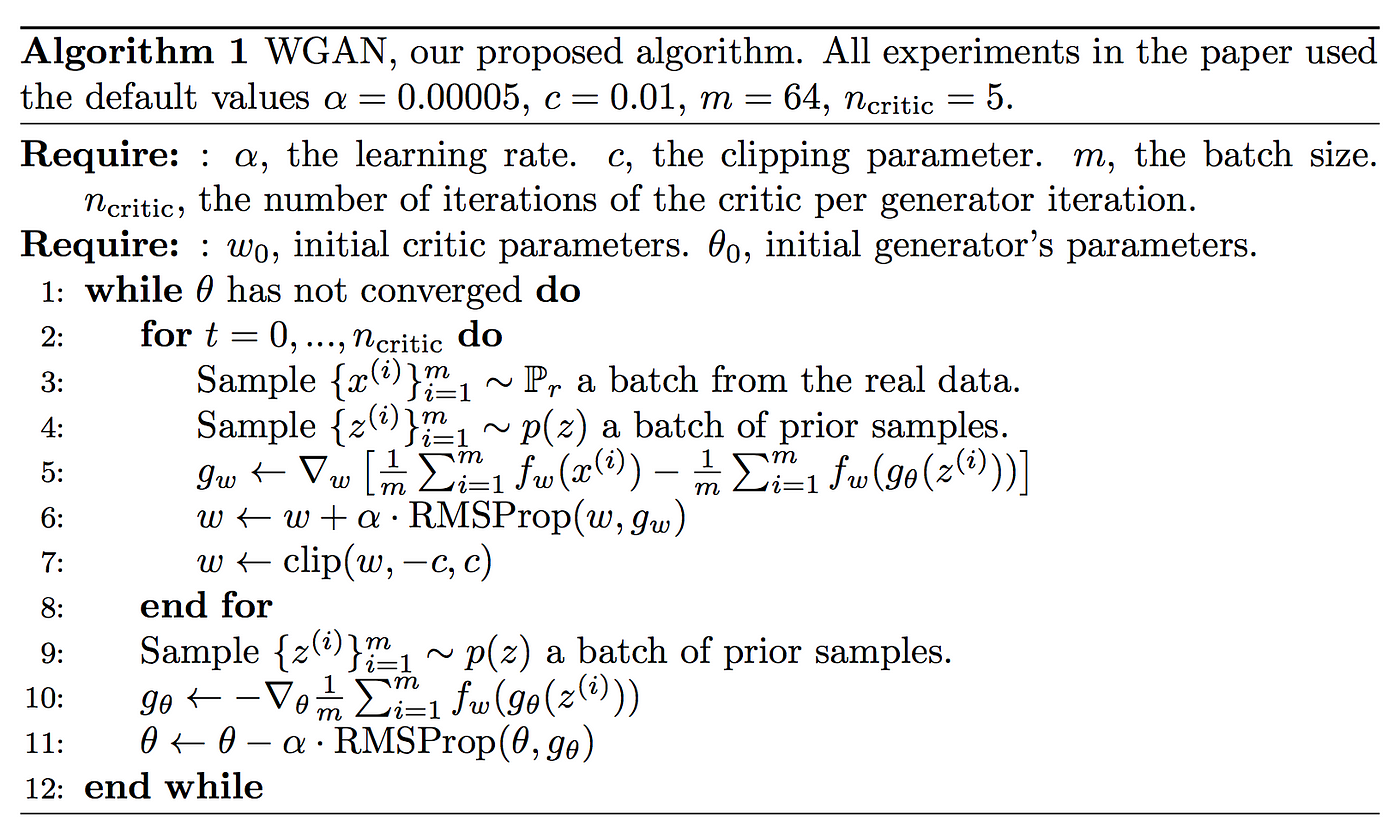

18.3. Implement WGAN

Implement WGAN following the instructions in the video, reusing the previous template.

Submit screenshots of your best results and the program source code.
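A minimal, framework-free sketch of what changes in WGAN compared with DCGAN: the discriminator becomes a critic that outputs an unbounded score (no sigmoid), the log losses are replaced by differences of mean scores, and in the original paper the critic weights are clipped to [-c, c] after every critic update (the paper uses c = 0.01, RMSProp with learning rate 5e-5 and 5 critic updates per generator update). Plain lists of floats stand in for tensors and the function names are hypothetical.

```python
# Framework-free sketch of the WGAN losses and weight clipping
# (Arjovsky et al., 2017). Lists of floats stand in for tensors.

CLIP_VALUE = 0.01  # c in the WGAN paper


def mean(xs):
    return sum(xs) / len(xs)


def critic_loss(scores_real, scores_fake):
    """The critic maximizes E[f(x_real)] - E[f(x_fake)]; as a loss to
    minimize that becomes mean(f(fake)) - mean(f(real)).
    Scores are raw critic outputs: no sigmoid, no log."""
    return mean(scores_fake) - mean(scores_real)


def generator_loss(scores_fake):
    """The generator pushes the critic scores of its samples up."""
    return -mean(scores_fake)


def clip_weights(weights, c=CLIP_VALUE):
    """Clamp every critic weight into [-c, c]; applied after each critic
    optimizer step as the paper's crude Lipschitz constraint.
    PyTorch equivalent: for p in critic.parameters(): p.data.clamp_(-c, c)"""
    return [max(-c, min(c, w)) for w in weights]


if __name__ == "__main__":
    print(critic_loss([2.0, 4.0], [1.0, -1.0]))  # -3.0: critic separates well
    print(generator_loss([1.0, 3.0]))            # -2.0
    print(clip_weights([0.5, -0.2, 0.003]))      # [0.01, -0.01, 0.003]
```

Because the critic loss is a plain difference of means, its value is only meaningful relative to earlier iterations; it is not bounded like a BCE loss.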

18.4. Implement WGAN with improvements

Implement it using the LFW dataset for face generation: https://scikit-learn.org/stable/modules/generated/sklearn.datasets.fetch_lfw_people.html

Implement GAN hacks (choose 2 and describe them in the source code comments): https://github.com/soumith/ganhacks#authors https://developers.google.com/machine-learning/gan/problems

For example:

1. Implement soft labels.

2. Feed x_real and x_fake as separate batches, each in its own optimizer.step pass.
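The two example hacks (soft labels and separate real/fake batches) can be sketched without a framework. Following the ganhacks label-smoothing tip, real targets are drawn from [0.7, 1.2] and fake targets from [0.0, 0.3] instead of hard 1 and 0, and the real and fake batches get separate losses instead of being mixed into one batch. The helper names and the hand-written BCE are illustrative assumptions; in PyTorch the soft targets would be passed to the BCE loss instead.

```python
import math
import random

# ganhacks label-smoothing ranges (hard labels would be 1.0 and 0.0)
REAL_RANGE = (0.7, 1.2)
FAKE_RANGE = (0.0, 0.3)


def soft_labels(n, low, high, rng=random):
    """Hypothetical helper: n random targets drawn uniformly from [low, high]."""
    return [rng.uniform(low, high) for _ in range(n)]


def bce(probs, targets, eps=1e-7):
    """Mean binary cross-entropy; probs are D outputs after a sigmoid.
    Clamping p also keeps the loss finite when a soft target exceeds 1.0."""
    total = 0.0
    for p, t in zip(probs, targets):
        p = min(max(p, eps), 1.0 - eps)
        total += -(t * math.log(p) + (1.0 - t) * math.log(1.0 - p))
    return total / len(probs)


def discriminator_losses(d_real, d_fake, rng=random):
    """Score the real batch and the fake batch separately (never mixed), each
    with its own soft targets. In PyTorch each loss would get its own
    backward() and optimizer.step() pass."""
    loss_real = bce(d_real, soft_labels(len(d_real), *REAL_RANGE, rng=rng))
    loss_fake = bce(d_fake, soft_labels(len(d_fake), *FAKE_RANGE, rng=rng))
    return loss_real, loss_fake


if __name__ == "__main__":
    rng = random.Random(0)
    # D is fairly confident: high scores on real images, low on fakes
    print(discriminator_losses([0.9, 0.8], [0.1, 0.2], rng=rng))
```

Randomizing the targets on every step keeps the discriminator from becoming overconfident, which is the failure mode described in the notes below (discriminator loss going to 0).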

Submit screenshots of your best results and the program source code.

https://www.linkedin.com/pulse/exploring-stylegan-breakthrough-ai-powered-image-arpit-vaghela-6mzrc/

https://datascience.stackexchange.com/questions/32671/gan-vs-dcgan-difference

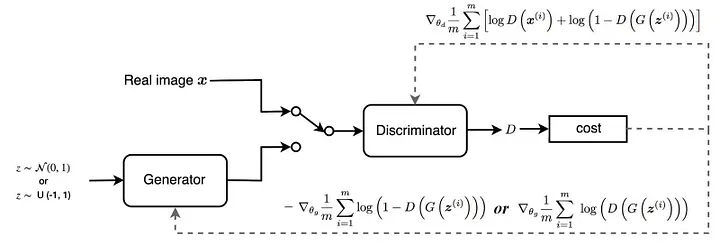

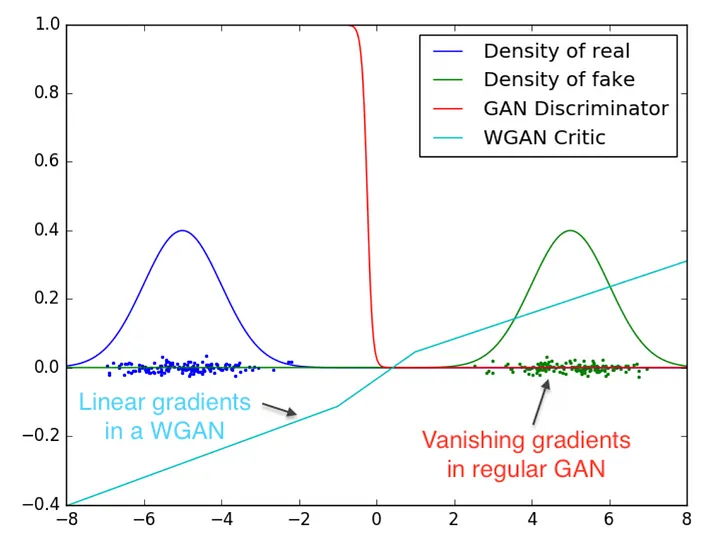

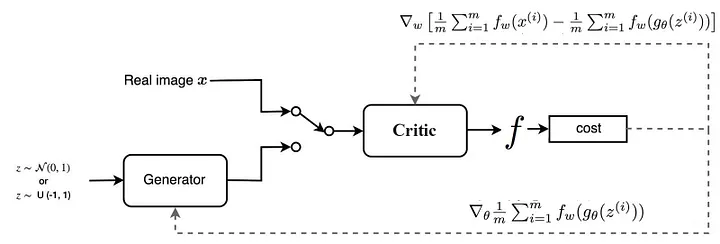

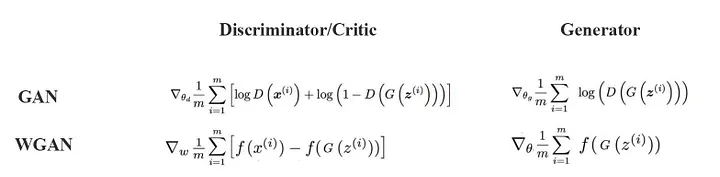

Wasserstein GAN

https://arxiv.org/pdf/1701.07875
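Why the Wasserstein (earth mover's) distance: when the real and generated distributions do not overlap, the Jensen-Shannon divergence behind the original GAN loss stays at log 2 no matter how far apart they are, so the generator gets no useful signal, while the Wasserstein-1 distance grows with the gap (this is the motivating example in the WGAN paper). The toy sketch below checks this for shifted point masses; `wasserstein_1d` relies on the fact that in 1-D the optimal transport plan pairs sorted samples. It is an illustration only: in a WGAN the critic estimates this distance, it is never computed directly.

```python
import math


def wasserstein_1d(a, b):
    """W1 distance between two equal-size 1-D samples: in 1-D the optimal
    transport plan pairs the i-th smallest value of a with that of b."""
    if len(a) != len(b):
        raise ValueError("samples must have the same size")
    return sum(abs(x - y) for x, y in zip(sorted(a), sorted(b))) / len(a)


def js_divergence(p, q):
    """Jensen-Shannon divergence (natural log) between two discrete distributions."""
    m = [(pi + qi) / 2 for pi, qi in zip(p, q)]

    def kl(x, y):
        # terms with x_i = 0 contribute 0; y_i > 0 whenever x_i > 0 because y = m
        return sum(xi * math.log(xi / yi) for xi, yi in zip(x, y) if xi > 0)

    return 0.5 * kl(p, m) + 0.5 * kl(q, m)


if __name__ == "__main__":
    real = [0.0, 0.0, 0.0, 0.0]
    for theta in (0.5, 1.0, 5.0):
        fake = [x + theta for x in real]
        print(f"theta={theta}: W1={wasserstein_1d(real, fake)}")
    # Any two histograms with disjoint support give the same JS value, log 2.
    print(js_divergence([1.0, 0.0], [0.0, 1.0]), math.log(2))
```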

⚠️ WGAN works only together with gradient clipping; otherwise the gradients collapse within a few epochs.
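A plain-Python sketch of global gradient-norm clipping, the rule that `torch.nn.utils.clip_grad_norm_(parameters, max_norm)` applies when called between `loss.backward()` and `optimizer.step()`: if the L2 norm of all gradients taken together exceeds `max_norm`, every gradient is scaled down by the same factor. The `max_norm` of 1.0 in the example is an assumed value. This is separate from the weight clipping (critic weights kept in [-0.01, 0.01]) that the WGAN paper itself uses.

```python
import math


def clip_grad_norm(grads, max_norm):
    """Scale gradients in place so their global L2 norm is at most max_norm.

    grads: list of per-parameter gradient lists (plain floats here).
    Returns the total norm measured before clipping, as PyTorch does.
    """
    total = math.sqrt(sum(g * g for layer in grads for g in layer))
    scale = max_norm / (total + 1e-6)  # small epsilon avoids division by zero
    if scale < 1.0:
        for layer in grads:
            for i, g in enumerate(layer):
                layer[i] = g * scale
    return total


if __name__ == "__main__":
    grads = [[3.0], [4.0]]  # global norm 5
    before = clip_grad_norm(grads, max_norm=1.0)
    print(before, grads)  # norm 5.0, gradients scaled to roughly [[0.6], [0.8]]
```

Scaling all gradients by one shared factor keeps the update direction unchanged and only limits its length.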

https://jonathan-hui.medium.com/gan-wasserstein-gan-wgan-gp-6a1a2aa1b490

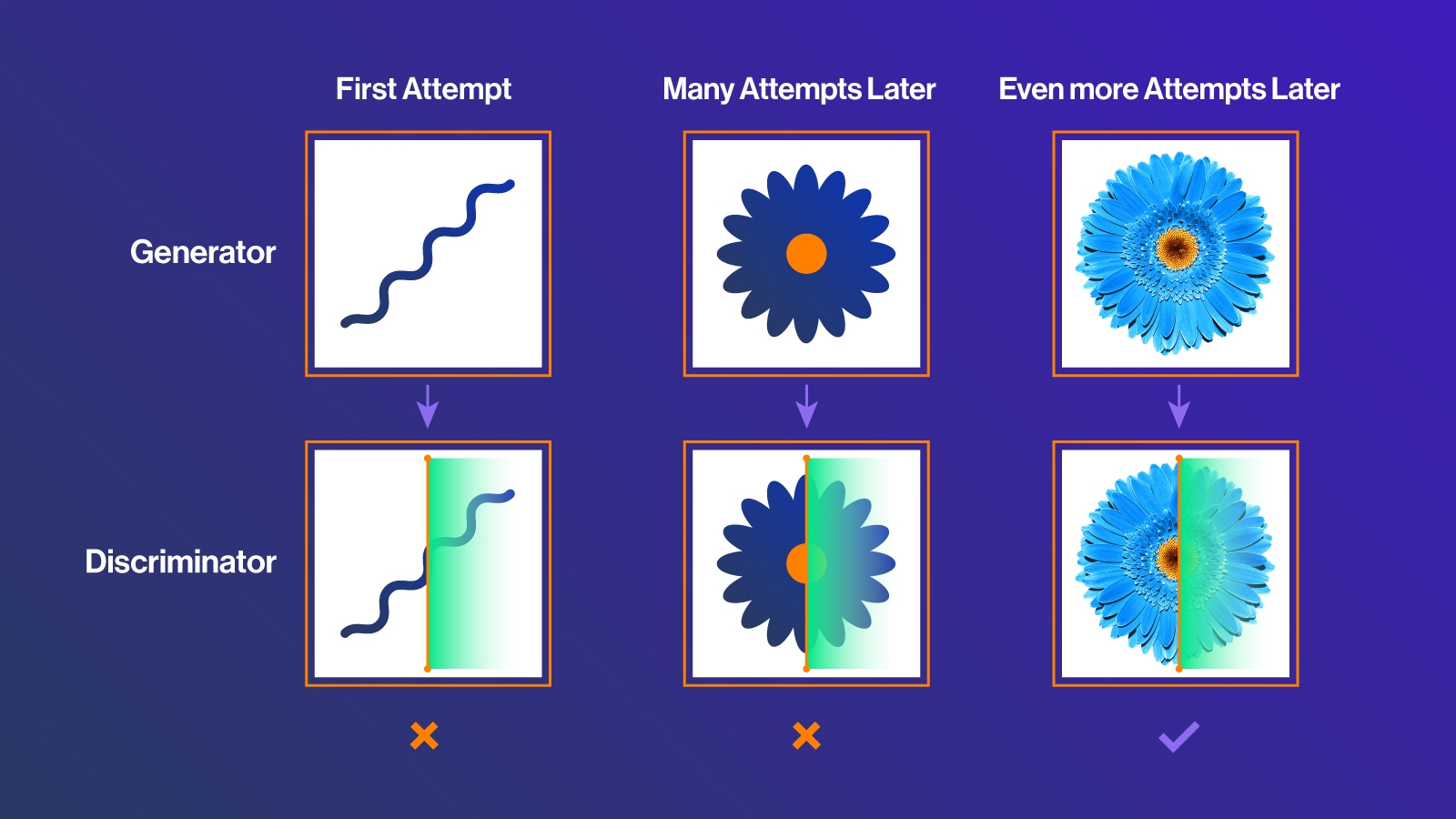

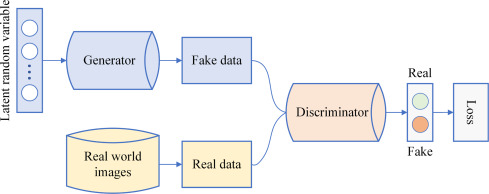

Generator: the more parameters, the better.

Discriminator: with too many parameters its loss drops to 0 and kills training; with too few its loss stays large. Neither works.

Changing learning rates and similar settings is not a good idea.

Might help, but not needed:

Discriminator warmup

Discriminator history
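Discriminator history can be sketched as a replay buffer of past fakes (the idea of Shrivastava et al., 2017, also listed in the GAN hacks as experience replay): once the buffer is full, each new fake is, with probability 0.5, swapped for a randomly chosen older one before the discriminator sees the batch, so the discriminator keeps penalizing modes the generator has already left. The class name, the capacity of 50 and the 0.5 swap rate are assumptions modelled on the image pool in the CycleGAN code; in PyTorch the stored items would be detached fake images.

```python
import random


class HistoryBuffer:
    """Replay buffer of past generator outputs for the discriminator.

    Until the buffer is full every new fake is stored and passed through.
    After that, each new fake is, with probability 0.5, exchanged for a random
    stored one (the old sample goes to D, the new one takes its slot).
    """

    def __init__(self, capacity=50, rng=None):
        self.capacity = capacity
        self.items = []
        self.rng = rng if rng is not None else random.Random()

    def query(self, batch):
        out = []
        for x in batch:  # in PyTorch: x would be a detached fake image
            if len(self.items) < self.capacity:
                self.items.append(x)
                out.append(x)
            elif self.rng.random() < 0.5:
                idx = self.rng.randrange(self.capacity)
                out.append(self.items[idx])
                self.items[idx] = x
            else:
                out.append(x)
        return out


if __name__ == "__main__":
    buf = HistoryBuffer(capacity=3, rng=random.Random(0))
    print(buf.query(["g1_a", "g1_b", "g1_c"]))  # buffer fills up: returned as-is
    print(buf.query(["g2_a", "g2_b", "g2_c"]))  # mix of new and older fakes
```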

Demonstrate mode collapse

Estimate quality using embeddings: deep metric learning, Inception Score, etc.
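Inception Score (Salimans et al., 2016) is one of the classifier-based quality measures mentioned above, and it also makes mode collapse measurable: IS = exp(E_x KL(p(y|x) || p(y))), where p(y|x) is a pretrained classifier's (originally Inception-v3) prediction for a generated image and p(y) is the average prediction over all samples. Sharp, varied predictions give a high score; if the generator collapses to one mode, every p(y|x) equals p(y) and the score drops to 1. In this sketch the predictions are hand-written lists, not real classifier outputs.

```python
import math


def inception_score(probs):
    """probs: list of per-sample class distributions p(y|x) (each row sums to 1).
    IS = exp(mean over samples of KL(p(y|x) || p(y))), p(y) = average row."""
    n, k = len(probs), len(probs[0])
    marginal = [sum(row[j] for row in probs) / n for j in range(k)]
    kl_total = 0.0
    for row in probs:
        # marginal[j] > 0 whenever row[j] > 0, so the log is always defined
        kl_total += sum(p * math.log(p / marginal[j])
                        for j, p in enumerate(row) if p > 0)
    return math.exp(kl_total / n)


if __name__ == "__main__":
    diverse = [[1.0 if i == j else 0.0 for j in range(4)] for i in range(4)]
    collapsed = [[1.0, 0.0, 0.0, 0.0] for _ in range(4)]
    print(inception_score(diverse))    # close to 4, the number of classes
    print(inception_score(collapsed))  # 1.0: every sample looks the same
```

The score is bounded by the number of classes, so it is only comparable between runs that use the same classifier.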

GAN common problems: https://developers.google.com/machine-learning/gan/problems

GAN hacks https://github.com/soumith/ganhacks#authors

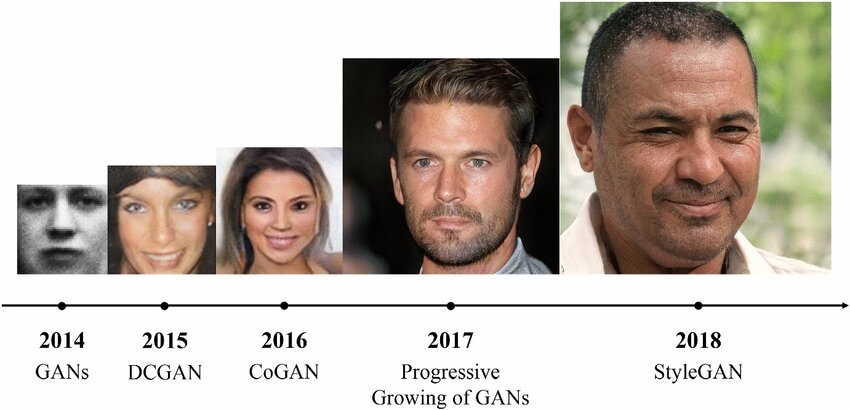

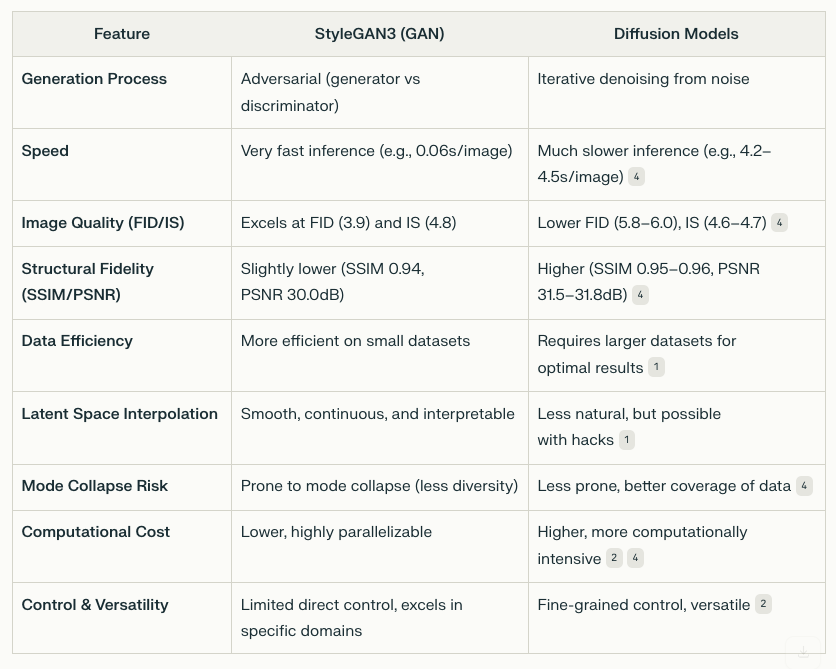

StyleGAN

Current SOTA:

StyleGAN3 was officially released by NVIDIA on October 12, 2021: https://nvlabs.github.io/stylegan3/

Comparison with diffusion models

https://www.sabrepc.com/blog/Deep-Learning-and-AI/gans-vs-diffusion-models